IoTLab students and associated faculty have access to the following HPC resources in the Computational Science Data Center. Students have also participated in the Student Cluster Competition at the International Conference for High Performance Computing, Networking, Storage, and Analysis.

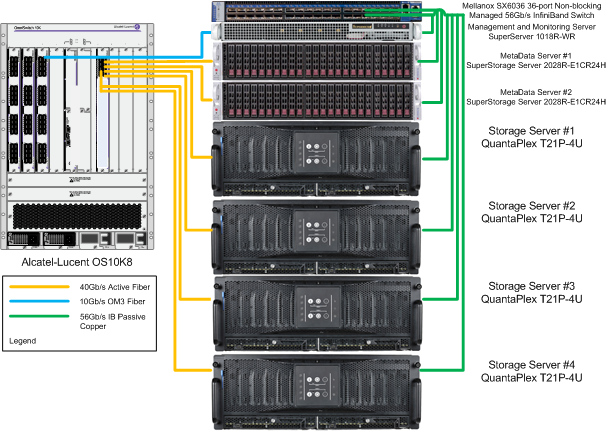

beehive

Beehive is a petascale, network-accessible storage system capable of parallel I/O, used for HPC numerical simulations and data acquisition processes related to research primarily in climate science. The BeeGFS storage cluster was obtained through NSF Office of Advanced Cyberinfrastructure (OAC) CC* Storage Grant 1659169 ($199,998), titled “Implementation of a Distributed, Shareable, and Parallel Storage Resource at San Diego State University to Facilitate High-Performance Computing for Climate Science.”

Beehive has a capacity of 2.4PB and consists of two metadata servers (SuperMicro SuperStorage Server 2028R-E1CR24H) and four object storage servers (QCT 4U 78 Bays High Density Storage Server 1S2PZZZ0007), for a total of 4 x 78 x 8TB HDDs. The storage cluster supports MPI-IO, parallel NetCDF, and parallel HDF5 to improve the run-time performance of I/O-bound computations. Beehive resides on the SDSU NSF-funded Science DMZ (“SDMZ”) network to facilitate high-speed (10/40/100 Gbps) data transfer using Globus.

A petascale parallel storage system provides the capacity needed to accommodate potentially petabyte-sized output files generated from coastal ocean modeling, numerical geologic CO2 sequestration, multiphase turbulent combustion, and real-time CO2 and CH4 data acquisition. It also facilitates parallel I/O to improve the run-time performance of I/O-bound processes, where the run-time is typically a function of grid resolution (mesh size) and the number of 4D temporal-spatial computed scalar, vector, and tensor fields to be saved to disk.

Beehive uses the BeeGFS parallel file system, which is developed at the Fraunhofer Institute for Industrial Mathematics. The BeeGFS cluster provides scalable, high-throughput, low-latency parallel I/O to network-accessible HPC clusters at San Diego State University used for climate-science-related numerical simulations, and enables scientific discovery through improved run-time performance of I/O-intensive workloads. With BeeGFS, I/O throughput scales linearly to a sustained throughput of 25 GB/s. BeeGFS client drivers are published under the GPL, and the server application is covered by the Fraunhofer EULA.
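
To make the dependence on grid resolution and field count concrete, the following is a rough sizing sketch, not a description of any Beehive tooling. All function and parameter names are hypothetical. It assumes double-precision values (8 bytes), 3 components per vector field, 9 per tensor field, decimal units (1 GB = 10^9 bytes), and that a write actually sustains the 25 GB/s quoted above. The raw drive total it computes (2,496 TB) is before any formatting or redundancy overhead, which may account for the difference from the 2.4PB figure.

```python
# Rough sizing sketch; every name and default here is illustrative.

def raw_capacity_tb(servers=4, bays_per_server=78, tb_per_drive=8):
    """Raw (unformatted) drive capacity in TB across the object storage servers."""
    return servers * bays_per_server * tb_per_drive


def output_bytes(nx, ny, nz, nt, n_scalar=0, n_vector=0, n_tensor=0,
                 bytes_per_value=8):
    """Bytes needed to save nt snapshots of the given fields on an nx*ny*nz grid.

    Assumes 1 component per scalar, 3 per vector, and 9 per (full) tensor.
    """
    components = n_scalar + 3 * n_vector + 9 * n_tensor
    return nx * ny * nz * nt * components * bytes_per_value


def write_seconds(n_bytes, throughput_gb_per_s=25.0):
    """Idealized write time at a sustained throughput given in decimal GB/s."""
    return n_bytes / (throughput_gb_per_s * 1e9)


if __name__ == "__main__":
    # Hypothetical run: 2048^3 grid, 100 snapshots, 2 scalar fields, 1 vector field.
    size = output_bytes(2048, 2048, 2048, 100, n_scalar=2, n_vector=1)
    print(f"raw capacity: {raw_capacity_tb()} TB")
    print(f"output size:  {size / 1e12:.2f} TB")
    print(f"write time:   {write_seconds(size) / 60:.1f} min at 25 GB/s")
```

Doubling the resolution in each spatial dimension multiplies the output size by eight, which is why I/O time can dominate at fine mesh sizes.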

notos

notos.sdsu.edu is a Supermicro SuperServer 4028GR-TR GPU cluster optimized for AI, deep learning, and HPC applications. It features 8x NVIDIA Tesla V100-PCIe GPUs and has the TensorFlow, TensorFlow Lite, Caffe, and Keras deep learning frameworks installed.

mixcoatl

mixcoatl.sdsu.edu is a College of Engineering-owned Linux cluster and SDMZ data transfer node (DTN) running CentOS release 6.5 that resides in Engineering server room 207B. Mixcoatl is a Dell PowerEdge M1000e blade enclosure with 16 Dell PowerEdge M620 compute nodes, where each node has two Intel Xeon E5-2680 (2.70GHz, 346 GFLOPs) octa-core CPUs, yielding a total core count of 256. Each node has 128 GB of physical memory, yielding a total physical memory capacity of approximately 2TB. Local scratch storage is provided by two 100GB SSDs per node. Network connectivity to the Science DMZ is 10 Gbps Ethernet, while inter-blade connectivity is via 4x Fourteen Data Rate (FDR) InfiniBand (54.54 Gbps) Mellanox ConnectX-3 PCI mezzanine cards. Mixcoatl is used for running carbon capture, utilization, and geologic sequestration codes, ANSYS Fluent, ANSYS HFSS, SIMULIA ABAQUS, OpenFOAM, ConvergeCFD, MATLAB, and the HIT3DP pseudospectral DNS code. Theoretical peak performance is 88.58 TFLOPs.
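
The aggregate figures for mixcoatl can be cross-checked with a few lines of arithmetic. This is a sketch with hypothetical variable names. The text does not say whether the 346 GFLOPs rating applies per core or per CPU; the 88.58 TFLOPs figure coincides with multiplying it by the 256 cores, so both readings are computed for comparison. The InfiniBand check assumes the standard FDR lane rate of 14.0625 Gbps with 64b/66b encoding, which is not stated in the text.

```python
# Cross-check of the quoted aggregates; variable names are illustrative.

NODES = 16
CPUS_PER_NODE = 2
CORES_PER_CPU = 8          # octa-core Xeon E5-2680
MEM_GB_PER_NODE = 128
RATED_GFLOPS = 346         # rating quoted in the text; per-core vs per-CPU is unclear

total_cpus = NODES * CPUS_PER_NODE
total_cores = total_cpus * CORES_PER_CPU
total_mem_gb = NODES * MEM_GB_PER_NODE

# Two readings of the 346 GFLOPs rating.
tflops_if_per_core = total_cores * RATED_GFLOPS / 1000
tflops_if_per_cpu = total_cpus * RATED_GFLOPS / 1000

# 4x FDR InfiniBand: 4 lanes at 14.0625 Gbps signalling, 64b/66b encoding (assumed).
FDR_LANE_GBPS = 14.0625
LANES = 4
fdr_data_gbps = LANES * FDR_LANE_GBPS * 64 / 66

if __name__ == "__main__":
    print(f"cores: {total_cores}, memory: {total_mem_gb} GB")
    print(f"peak if rating is per core: {tflops_if_per_core:.2f} TFLOPs")
    print(f"peak if rating is per CPU:  {tflops_if_per_cpu:.2f} TFLOPs")
    print(f"4x FDR data rate: {fdr_data_gbps:.2f} Gbps")
```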

marconi

marconi.sdsu.edu is a College of Engineering-owned Dell PowerEdge R900 server with 132GB physical memory. This server is used for running ns-3 discrete event network simulations, hosting Java web applications, and serving as a LoRaWAN application server.

NVIDIA DGX A100 dgx.sdsu.edu

Funded under NSF Office of Advanced Cyberinfrastructure (OAC) CC* Compute Grant 2019194 ($399,328), titled “CC* Compute: Central Computing with Advanced Implementation at San Diego State University,” the DGX A100 provides 8x NVIDIA A100 40 GB GPUs for accelerated and deep-learning applications. Additional system specifications include 320 GB total GPU memory, 1TB system memory, and dual AMD Rome 7742 CPUs (128 total cores).

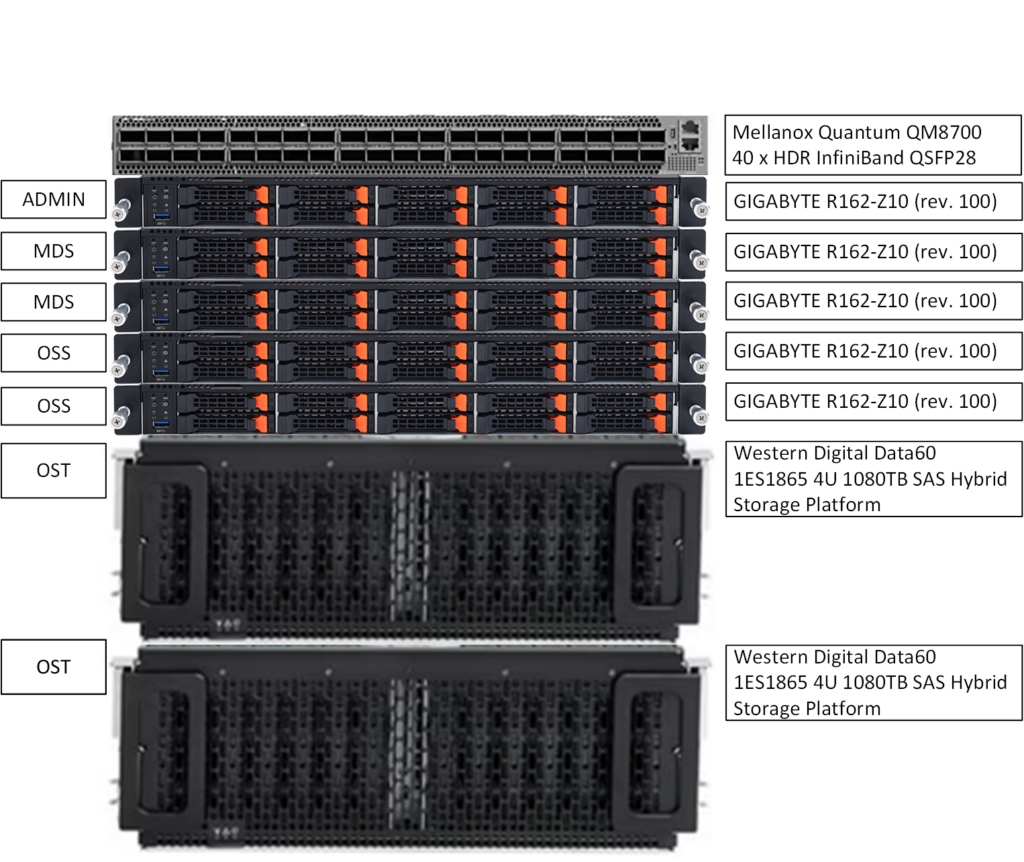

beekeeper

alveo.sdsu.edu

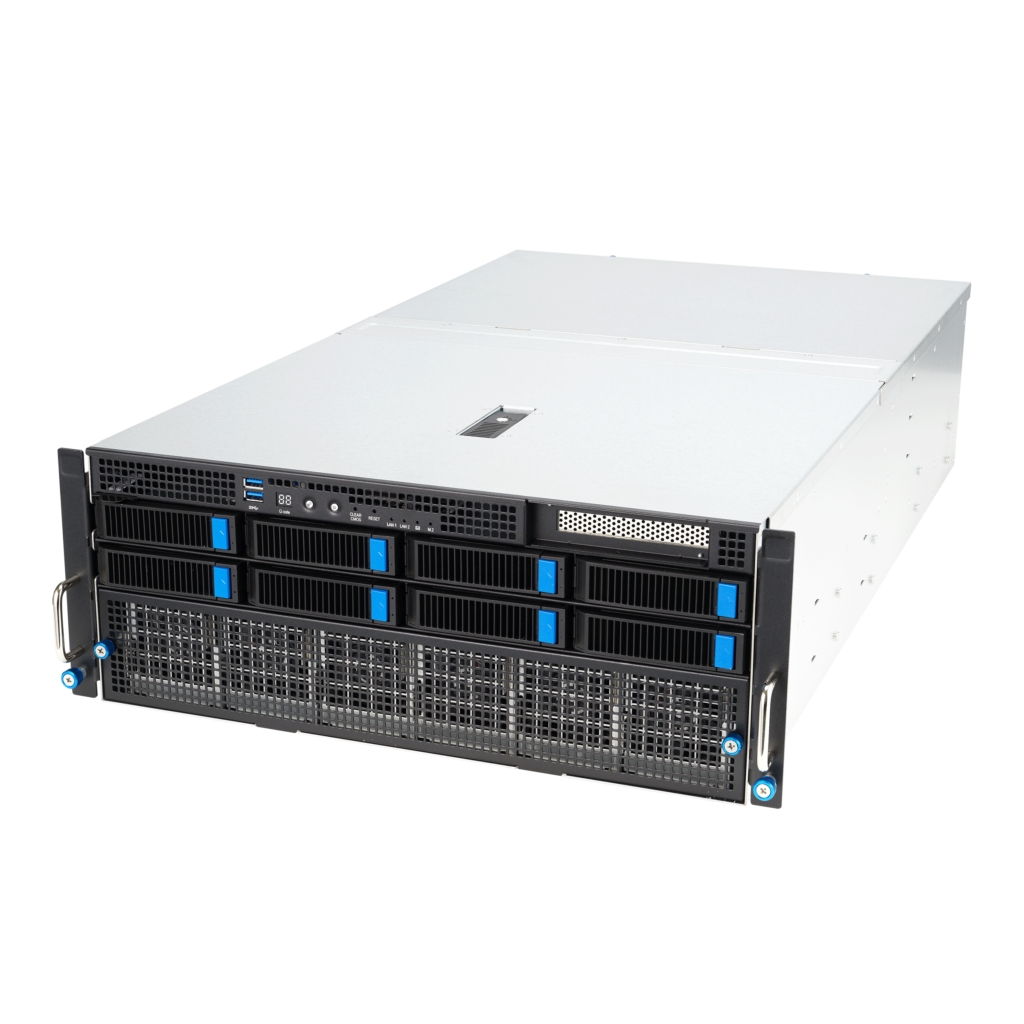

Gigabyte G482-Z54 (rev. 100)

Funded under NSF Office of Advanced Cyberinfrastructure (OAC) CC* Compute Grant 2019194 ($399,328), titled “CC* Compute: Central Computing with Advanced Implementation at San Diego State University,” the Gigabyte G482-Z54 HPC Server is a 4U DP 8 x Gen4 GPU Server that is a node in the NRP Kubernetes cluster. This node hosts a Xilinx Alveo SN1000 SmartNIC.

aptd.sdsu.edu

Gigabyte R282-Z93 (rev. 100) rackmount server, 2U, 12x Hot-Swap Bays. Contains a Xilinx Alveo U280 data center accelerator board. Funded under Office of Naval Research (ONR) Award N00014-21-1-2023 ($249,999), titled “Training Navy ROTC students in the Development and Deployment of FPGA Accelerated Network Intrusion Detection Systems for Identifying Indicators of Cyber Attack.”

mantis.sdsu.edu

Nodes A-D

Gigabyte H273-Z82-AAW1 rackmount server, 2U, 4x nodes, 2x AMD EPYC 9654 96-Core Processors/node, 1.5TB physical memory/node.

mantis.sdsu.edu

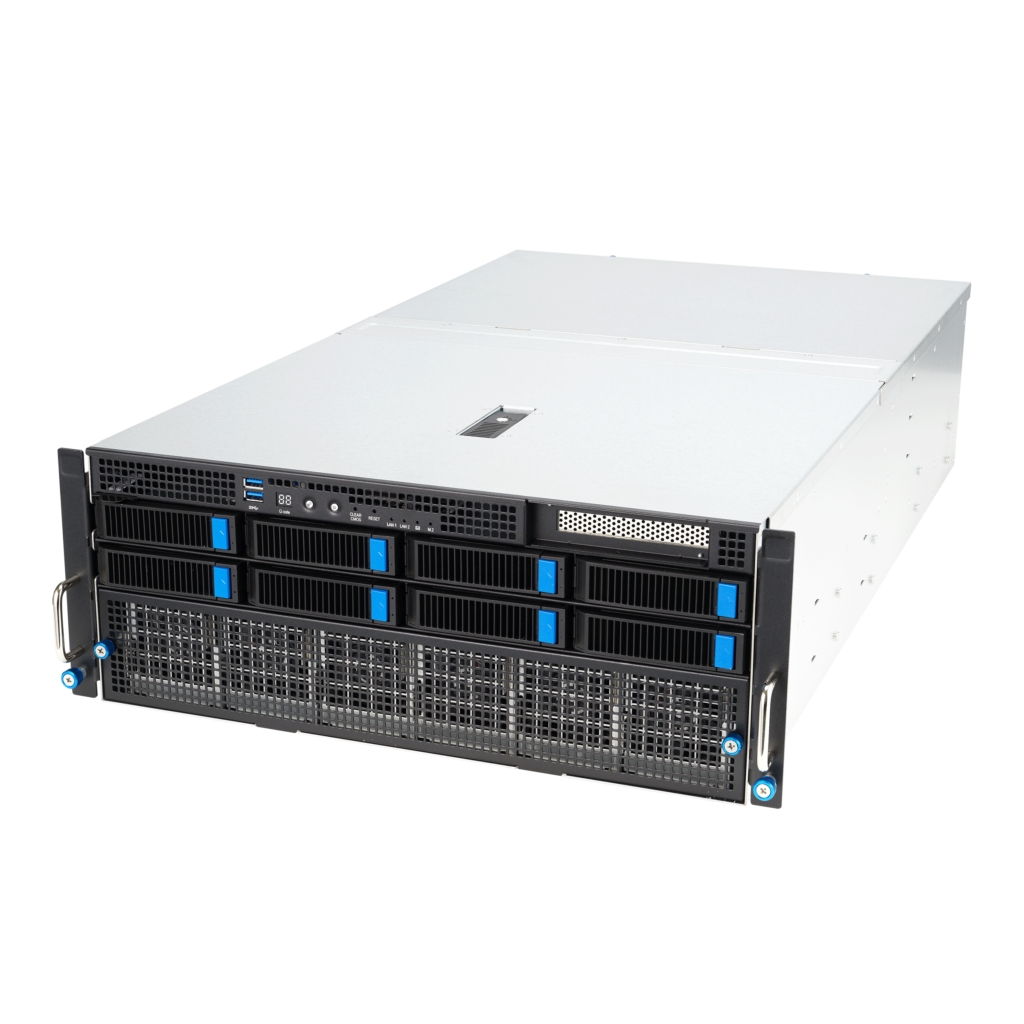

Node E

ASUSTeK COMPUTER INC. ESC8000A-E12, 4U, 1x node, 2x AMD EPYC 9654 96-Core Processors, 4x Instinct MI210 GPUs, 755GB physical memory.

mantis.sdsu.edu

Node F

Gigabyte MZ73-LM0-000, 4U, 1x node, 2x AMD EPYC 9754 128-Core Processors, 1x NVIDIA RTX A6000 GPU, 1.5TB physical memory.
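
For a quick view of what the mantis nodes offer in aggregate, the per-node specifications listed above can be tallied as follows. This is an illustrative sketch: the `Node` class and field names are hypothetical, and 1.5TB is taken as 1500 GB (decimal), which is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    """One mantis node, using the figures listed on this page."""
    name: str
    cpus: int
    cores_per_cpu: int
    mem_gb: int      # 1.5TB entered as 1500 GB (assumed decimal units)
    gpus: int = 0

    @property
    def cores(self) -> int:
        return self.cpus * self.cores_per_cpu


MANTIS = [
    Node("A", 2, 96, 1500),
    Node("B", 2, 96, 1500),
    Node("C", 2, 96, 1500),
    Node("D", 2, 96, 1500),
    Node("E", 2, 96, 755, gpus=4),    # 4x Instinct MI210
    Node("F", 2, 128, 1500, gpus=1),  # 1x NVIDIA RTX A6000
]

total_cores = sum(n.cores for n in MANTIS)
total_mem_gb = sum(n.mem_gb for n in MANTIS)
total_gpus = sum(n.gpus for n in MANTIS)

if __name__ == "__main__":
    print(f"cores: {total_cores}, memory: {total_mem_gb} GB, GPUs: {total_gpus}")
```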